Designers' eternal struggle is figuring out how to make software intuitive. I'm starting to see AI being applied to help with this. How?

A design is intuitive when we can match how the software works to how people think about the concept, idea, or problem at hand. In design jargon, this means matching the conceptual model to the user's mental model.

Doing that is hard, mostly because we can never fully and reliably know people's mental models. They also differ from person to person. And despite all the research and testing we do, our understanding of those mental models remains imperfect.

But learning the mental model is only half of the work. Then we still have to design the product around it, which means dealing with tons of constraints, from wiring anything new into the existing product to making sure the design is feasible to build.

When we succeed, the result is an intuitive product. The reality, though, is that so much of today's software is still so hard to use.

Enter AI.

Recently I've seen two brilliant examples of AI being used to make a task super intuitive.

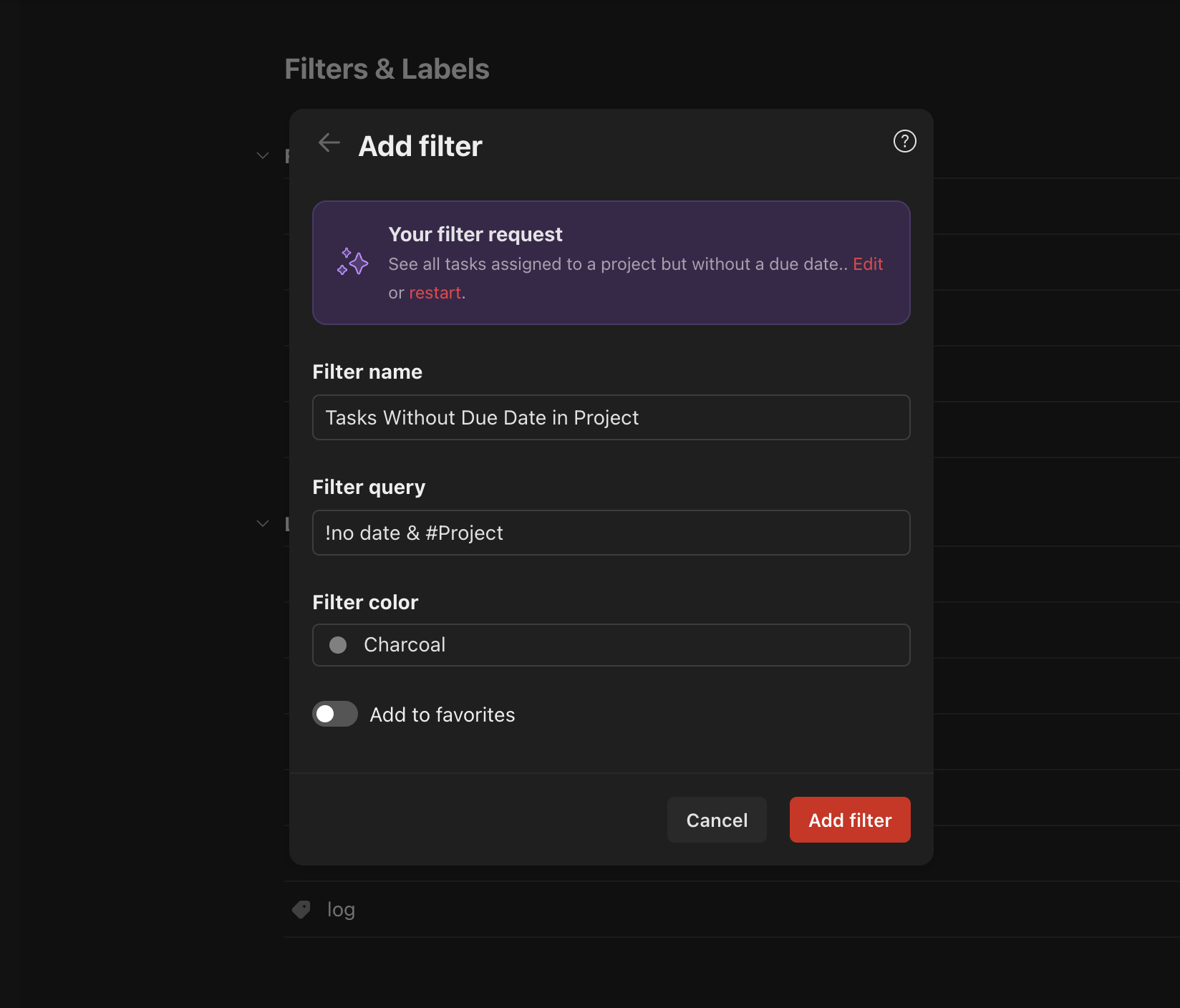

The first one is from Todoist, a task planner and one of my favourite products. They let users filter with a natural-language request instead of having to use a complex, hard-to-learn query language. Here's a demo.

In this case, the user has a mental model of how filtering works. They also have a clear idea about what they need. But there's a big gap between that and the query language (i.e. the conceptual model).

Using AI bridges that gap. People can intuitively input their request without having to learn the complex query language.

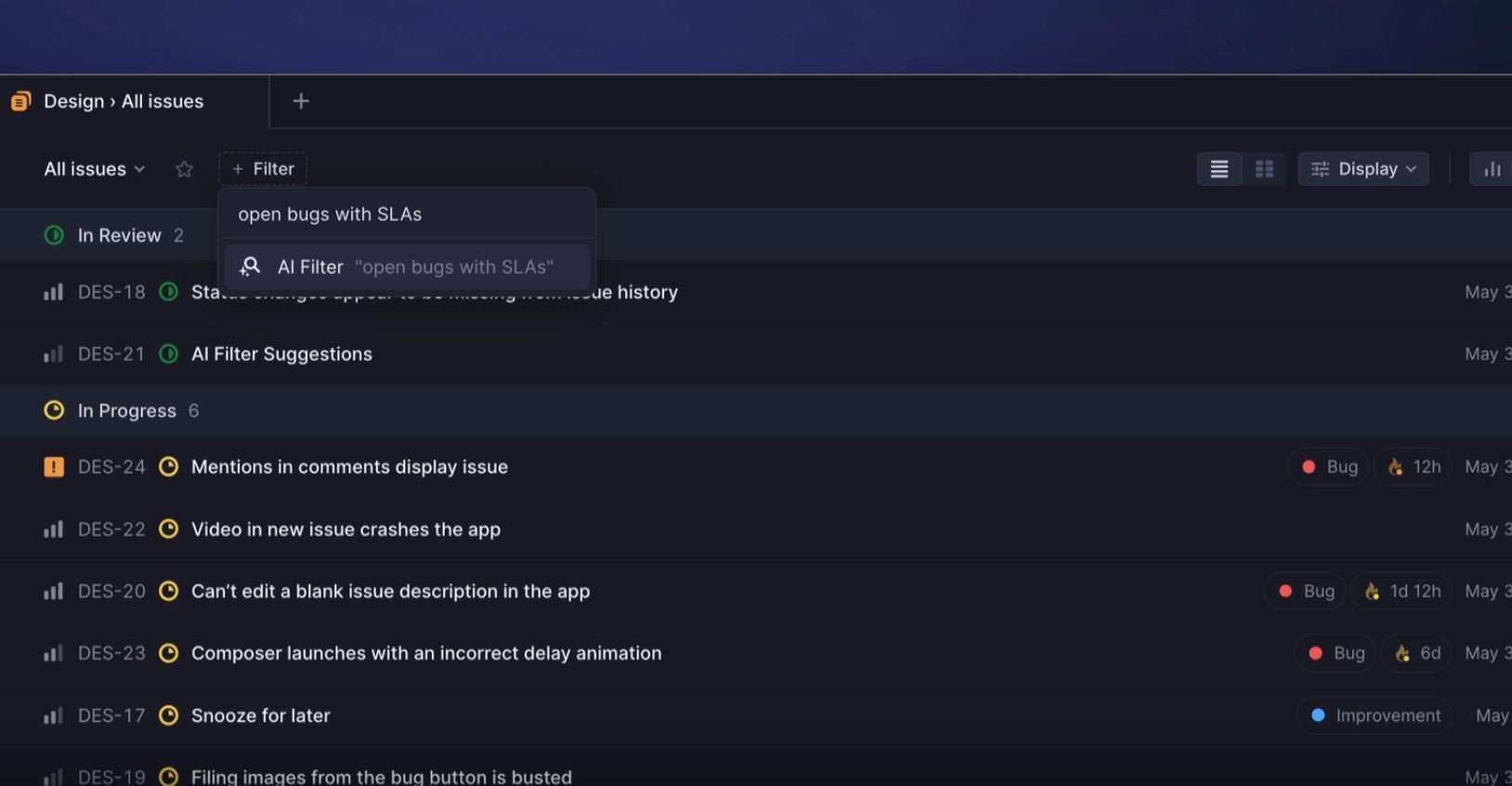

The second example, coincidentally, is also about filtering, but comes from Linear. In their own words, "describe the issues or projects you want to see in a few words, and the corresponding filters will be applied using AI."

Here, the user avoids having to deal with a bunch of selects and dropdowns, not to mention the nightmare of logical operators for more advanced requests.

Both examples above take the user's raw input and translate it into the product's UI. That is more intuitive on its own, but it also has the added benefit of showing the user how their mental model maps onto the product's conceptual model.

AI is used as a link between the user's mental model and the product's conceptual model. Where gaps between the two models previously forced people to adapt to the product's UI and/or suffer through using it, AI now lets us bridge those gaps more easily.

We have only looked at one use case, but I believe there are many others where AI can be applied like this. I'm excited about the potential of making software way more intuitive this way.

How AI can make software more intuitive